How we reduced our API Latency by 50% using Cloudflare

Discover how we boosted speeds by switching to Cloudflare and get insights into our migration journey.

Written by

Paula Guijarro

Published on

March 15, 2023

At Xata we always look for ways to improve our performance and user experience. One way to achieve this is by reducing the latency of our API responses. In this post, we will share our experience migrating to Cloudflare and the benefits we gained.

First, let's talk about Time to First Byte (TTFB): the time between sending a request and receiving the first byte of the response. TTFB is affected by many factors, including network latency, server processing time, and DNS lookup time. As a general rule of thumb, most sites should strive for a TTFB of 0.8 seconds or less.

You can’t improve what you don’t measure, so let’s see what Xata's response times were when we started working on this:

$ curl -so /dev/null https://us-east-1.xata.sh -w "| dnslookup: %{time_namelookup} \n| connect: %{time_connect} \n| appconnect: %{time_appconnect} \n| pretransfer: %{time_pretransfer} \n| starttransfer: %{time_starttransfer} \n| total: %{time_total} \n| size: %{size_download}\n"

| dnslookup: 0.144752

| connect: 0.254816

| appconnect: 0.493482

| pretransfer: 0.493587

| starttransfer: 0.610611

| total: 0.610689

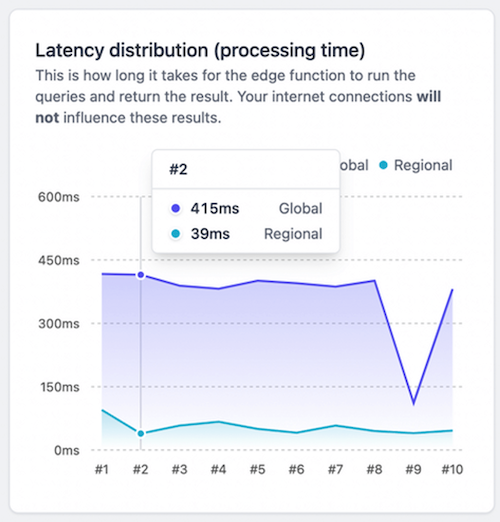

| size: 110We made further tests to have a clear picture of the current state. We’ve also used Vercel’s edge data latency app. The app performs simple queries to a specific Xata database table in two different ways:

- Regional: sends 10 requests to a Vercel function that is pinned to run in the N. Virginia region (us-east-1). These are called “regional” requests.

- Global: sends 10 requests to a Vercel function that is not pinned to a region. It picks a region close to where we call the Vercel function from, which in our case is Spain (Europe). These are called “global” requests.

“Global” response times were worse than “regional” ones. Digging deeper, we saw that response times dropped when we sent requests consistently, but not by as much as we expected. This prompted us to look for improvements, so we ran a proof of concept to test Cloudflare’s services.

Cloudflare is a content delivery network (CDN) that provides various services designed to improve the performance and security of websites and applications. It operates a global network of servers in over 285 cities spread across the globe, ensuring that they are as close to end-users as possible.

We put Cloudflare in front of our API and let it handle DNS resolution and TLS termination, so that both happen closer to the user. Cloudflare's DNS is fast and reliable, and it caches DNS records so that subsequent requests are even faster. By using Cloudflare's globally distributed DNS network, we saw DNS lookup times drop from ~150ms without Cloudflare to ~10ms with it.
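To see why moving DNS resolution and TLS termination closer to the user matters, it helps to turn the baseline curl measurement into per-phase durations. curl's `time_*` variables are cumulative (each one is measured from the start of the request), so each phase is the difference between consecutive values. The sketch below uses the figures from the baseline output earlier in this post; the phase labels and the helper name `phase_durations` are ours, chosen for illustration.

```python
# Cumulative timings (seconds) copied from the baseline curl output above.
BASELINE = {
    "time_namelookup": 0.144752,
    "time_connect": 0.254816,
    "time_appconnect": 0.493482,
    "time_pretransfer": 0.493587,
    "time_starttransfer": 0.610611,
    "time_total": 0.610689,
}

# Illustrative labels for what happens between consecutive curl timers.
PHASES = [
    ("DNS lookup", "time_namelookup"),
    ("TCP connect", "time_connect"),
    ("TLS handshake", "time_appconnect"),
    ("pre-transfer", "time_pretransfer"),
    ("wait for first byte", "time_starttransfer"),
    ("download", "time_total"),
]


def phase_durations(timings):
    """Convert curl's cumulative timers into per-phase durations in ms."""
    durations = {}
    previous = 0.0
    for label, key in PHASES:
        durations[label] = round((timings[key] - previous) * 1000, 1)
        previous = timings[key]
    return durations


if __name__ == "__main__":
    phases = phase_durations(BASELINE)
    for label, ms in phases.items():
        print(f"{label:>20}: {ms:6.1f} ms")
    # Everything that happens before the HTTP request is actually sent.
    setup = phases["DNS lookup"] + phases["TCP connect"] + phases["TLS handshake"]
    share = setup / (BASELINE["time_total"] * 1000)
    print(f"DNS + TCP + TLS: {setup:.1f} ms ({share:.0%} of the total)")
```

On this single run, roughly 494 ms of the 611 ms total (about 81%) went to DNS, TCP, and TLS setup before the request was even sent, which is the part of the exchange that benefits from being handled closer to the user.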

When a user makes a request to our API, it is routed to a nearby Cloudflare server rather than travelling all the way to our origin before a connection can be set up. The connection handshakes therefore cover a much shorter distance, resulting in lower latency and a faster TTFB. Cloudflare's Anycast network routes traffic to a nearby data center, and Cloudflare can also apply smart routing algorithms to optimize network paths.
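A rough round-trip model makes the effect of distance more concrete. The sketch below is a back-of-the-envelope estimate, not a measurement. It assumes that a TCP handshake costs one round trip and a TLS handshake costs two, which is roughly what the baseline curl timings suggest (about 110 ms to connect and about 239 ms for TLS). The 10 ms round trip to the edge and the 7 ms origin processing time are hypothetical values picked for illustration, and the names `Path` and `cold_request_ms` are ours.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One network path as seen by the client (all values in milliseconds)."""
    dns_ms: float          # time to resolve the hostname
    client_rtt_ms: float   # round trip between the client and the server it connects to
    upstream_ms: float     # extra time that server needs to produce the first byte


def cold_request_ms(path: Path, tls_round_trips: int = 2) -> float:
    """Estimate TTFB for a request on a brand-new client connection."""
    tcp = path.client_rtt_ms                      # TCP handshake: 1 round trip
    tls = tls_round_trips * path.client_rtt_ms    # TLS handshake: assumed 2 round trips
    request = path.client_rtt_ms + path.upstream_ms
    return path.dns_ms + tcp + tls + request


ORIGIN_RTT_MS = 110.0  # approximated from the baseline curl connect time
PROCESSING_MS = 7.0    # hypothetical origin processing time
EDGE_RTT_MS = 10.0     # hypothetical round trip to a nearby edge server

# The client talks to the origin directly.
direct = Path(dns_ms=145.0, client_rtt_ms=ORIGIN_RTT_MS, upstream_ms=PROCESSING_MS)

# The client talks to a nearby edge that reuses a warm connection to the origin.
edge_warm = Path(dns_ms=10.0, client_rtt_ms=EDGE_RTT_MS,
                 upstream_ms=ORIGIN_RTT_MS + PROCESSING_MS)

# Same edge, but it first has to open its own TCP + TLS connection to the origin.
edge_cold = Path(dns_ms=10.0, client_rtt_ms=EDGE_RTT_MS,
                 upstream_ms=3 * ORIGIN_RTT_MS + ORIGIN_RTT_MS + PROCESSING_MS)

if __name__ == "__main__":
    for name, path in [("direct", direct), ("edge, warm origin connection", edge_warm),
                       ("edge, cold origin connection", edge_cold)]:
        print(f"{name:>30}: {cold_request_ms(path):.0f} ms")
```

Under these assumptions the direct path comes out at about 592 ms, the warm edge path at about 167 ms, and the cold edge path at about 497 ms. The shape matters more than the exact numbers: most of the gain depends on the edge already holding an open connection to the origin.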

During the proof of concept, we also tried Cloudflare Argo Smart Routing. Argo is a service that optimizes content delivery over the Cloudflare network, using a combination of smart routing and protocol optimizations. The results showed a slight improvement in response times when using Argo, but it was not significant for our use case.

We ran load tests to compare different configurations, sending requests for 120 seconds and opening a new connection for each request. A summary of the results is shown below:

| Configuration | Transactions | Average | Longest | Shortest |
|---|---|---|---|---|
| Cloudflare DNS and Proxy | 572 hits | 0.21s | 0.63s | 0.16s |
| Cloudflare + Argo SR | 603 hits | 0.20s | 0.49s | 0.14s |
| Cloudflare + Cloudflare LB | 571 hits | 0.21s | 1.21s | 0.16s |
| Previous DNS Configuration | 298 hits | 0.40s | 0.48s | 0.36s |
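The relative gains follow directly from the table. The short sketch below recomputes them from the published figures; the dictionary layout and the function name `compare` are ours. It also multiplies hits by average response time as a sanity check. The product lands close to the 120-second test window in every row, which would be consistent with requests being sent one after another. The post does not state the concurrency level, so treat that as an inference.

```python
# (hits, average response time in seconds), copied from the table above.
RESULTS = {
    "Cloudflare DNS and Proxy": (572, 0.21),
    "Cloudflare + Argo SR": (603, 0.20),
    "Cloudflare + Cloudflare LB": (571, 0.21),
    "Previous DNS Configuration": (298, 0.40),
}


def compare(results, baseline="Previous DNS Configuration"):
    """Express each configuration relative to the baseline row."""
    base_hits, base_avg = results[baseline]
    rows = {}
    for name, (hits, avg) in results.items():
        rows[name] = {
            # Negative means faster than the baseline.
            "latency_change_pct": round((avg - base_avg) / base_avg * 100, 1),
            # Positive means more requests completed in the same window.
            "throughput_change_pct": round((hits - base_hits) / base_hits * 100, 1),
            # Total time spent waiting on responses: hits x average.
            "busy_seconds": round(hits * avg, 1),
        }
    return rows


if __name__ == "__main__":
    for name, row in compare(RESULTS).items():
        print(f"{name:>28}: latency {row['latency_change_pct']:+.1f}%, "
              f"throughput {row['throughput_change_pct']:+.1f}%, "
              f"busy {row['busy_seconds']:.1f}s")
```

With the plain DNS-and-proxy setup, the average response time drops by about 47.5% and the number of completed requests rises by about 92% over the same window.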

To further validate our findings, we also executed several runs of our end-to-end (E2E) tests to gather more data on the performance difference. On one side, we ran our usual tests with our default configuration. On the other, we configured a secondary domain in Cloudflare and proxied the requests to our API. The results showed that the Cloudflare configuration significantly reduced the duration of our tests. We saved about 60 seconds on average (>25%) in the Xata eu-west-1 region, as shown below:

# Before

Test Files 15 passed (15) Tests 172 passed | 2 skipped (174) Start at 11:07:13 Duration 221.77s (transform 793ms, setup 2ms, collect 7.42s, tests 203.69s)

# Now

Test Files 15 passed (15) Tests 172 passed | 2 skipped (174) Start at 08:32:56 Duration 157.63s (transform 822ms, setup 2ms, collect 7.09s, tests 141.18s)

After this PoC, we concluded that the benefits Cloudflare brought us were enough to justify the change, so we migrated our production DNS to Cloudflare and switched our traffic to the Cloudflare network to proxy the requests to our API.
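As a quick check of the E2E figures above, the sketch below extracts the durations from the two summary lines and computes the saving. The regular expression and the helper names `parse_durations` and `saving` are ours and only cover the fields used here.

```python
import re

# Duration fragments copied from the "Before" and "Now" test summaries above.
BEFORE = "Duration 221.77s (transform 793ms, setup 2ms, collect 7.42s, tests 203.69s)"
NOW = "Duration 157.63s (transform 822ms, setup 2ms, collect 7.09s, tests 141.18s)"

PATTERN = re.compile(r"Duration (?P<total>[\d.]+)s .*tests (?P<tests>[\d.]+)s\)")


def parse_durations(line):
    """Return (total seconds, test-only seconds) from a summary line."""
    match = PATTERN.search(line)
    if match is None:
        raise ValueError(f"no duration found in: {line!r}")
    return float(match["total"]), float(match["tests"])


def saving(before, now):
    """Return (seconds saved, percentage saved relative to `before`)."""
    saved = before - now
    return round(saved, 2), round(saved / before * 100, 1)


if __name__ == "__main__":
    total_before, tests_before = parse_durations(BEFORE)
    total_now, tests_now = parse_durations(NOW)
    print("total duration:", saving(total_before, total_now))
    print("tests only:    ", saving(tests_before, tests_now))
```

For this pair of runs, that is 64.14 s (about 28.9%) off the total duration, or 62.51 s (about 30.7%) when counting test time only, in line with the "about 60 seconds (>25%)" figure.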

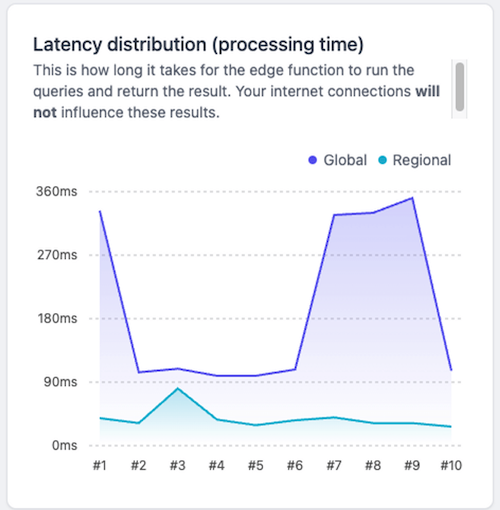

We have successfully decreased our API response times by leveraging Cloudflare's DNS and proxying the traffic through the Cloudflare network.

Our load testing results show that putting Cloudflare in front of our API improved our overall performance. Before Cloudflare, response times in our load tests never went below 360ms. With Cloudflare, the shortest response times dropped to around 160ms and the average to around 210ms, leading to a better user experience.

- Cloudflare's globally distributed DNS network improves DNS lookup times.

- Cloudflare can provide an appreciable improvement in response times when there is consistent traffic routed to our servers.

- Cloudflare offers additional features that we can enable later, such as Argo Smart Routing. According to Cloudflare, Argo optimizes network transit time, can reduce Internet latency by 30% on average, and dynamically selects the best path for each request based on factors such as network congestion, latency, and available capacity.

- Cloudflare does not always improve Time To First Byte (TTFB) of cold connections, especially when traffic is low.

- When the request originates near the servers, the improvement in response time may not be noticeable.

Xata already uses Cloudflare Workers for Platforms to host our serverless functions, and some exciting new features coming soon to Xata will also use Cloudflare services. Ready to experience our fast response times and start building something amazing on Xata? Sign up now and join our community on Discord. We can’t wait to see what you build!
